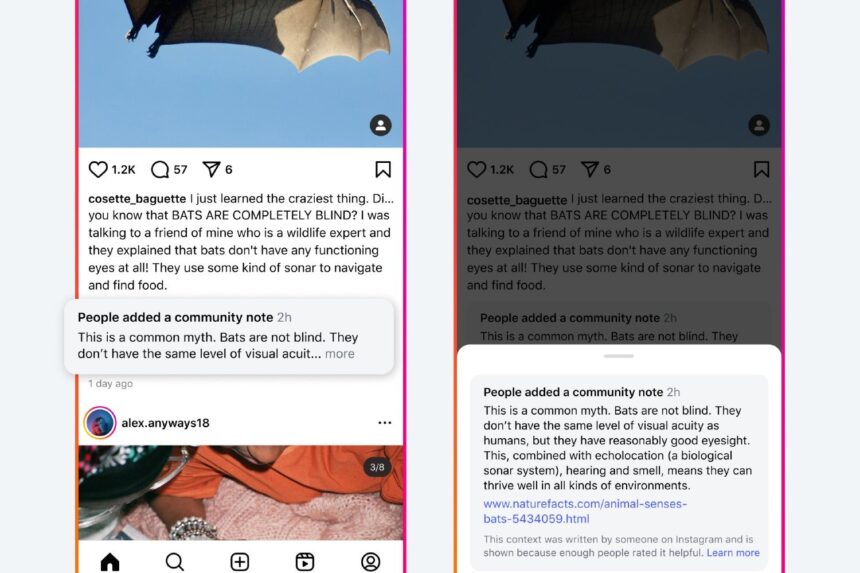

Earlier this year, Mark Zuckerberg said that, effectively, Meta was getting out of the ‘caring about the truth’ business and ditching third-party fact-checkers. Now it’s handing over the keys to its users. Meta announced that it will start to roll out a Community Notes feature, similar to the one on X, across its major social platforms.

Facebook, Instagram, and Threads will start to see community-written and rated fact checks appear on content—eventually. The test run for the feature will begin on March 18 and will focus on the note writing and ranking to work out the kinks before anything gets published. Meta claims that around 200,000 people have already signed up to a waitlist to join the pool of contributors for Community Notes, and it’ll start letting them in slowly through a random selection process as the feature gets introduced. The first languages approved for notes include English, Spanish, Chinese, Vietnamese, French, and Portuguese.

According to the company, it’ll initially use the same open-source algorithm that is already used by X, with ratings taking into consideration the rating history of each user to help determine which notes will appear on the platform. Eligible users must have a Meta account that is at least six months old and in good standing, with a verified phone number and two-factor authentication activated.
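For a sense of how those eligibility rules might look as a check, here is a minimal sketch. The `Account` fields, the `is_eligible` helper, and the 183-day approximation of "six months" are all assumptions made for illustration, not anything Meta has published, and it reads the phone number and two-factor requirements as both being mandatory.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: "six months" is approximated as 183 days.
MIN_ACCOUNT_AGE = timedelta(days=183)


@dataclass
class Account:
    """Hypothetical account record; field names are illustrative only."""
    created_on: date
    in_good_standing: bool
    phone_verified: bool
    two_factor_enabled: bool


def is_eligible(account: Account, today: date) -> bool:
    """Return True if the account meets every stated contributor requirement."""
    old_enough = today - account.created_on >= MIN_ACCOUNT_AGE
    return (
        old_enough
        and account.in_good_standing
        and account.phone_verified
        and account.two_factor_enabled
    )
```

Passing this kind of check would only get someone into the pool. Per Meta, actual admission from the waitlist is by random selection.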

Meta says that for a note to actually make it to the platform, it’ll have to receive approval from users with a “range of viewpoints” and, no matter how many contributors agree on a note, it’ll only get published if people who “normally disagree” say it is helpful. All notes will be capped at 500 characters and will be required to include a link that supports the content in the note.
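To make that publishing rule concrete, here is a deliberately simplified sketch. It is not the open-source algorithm itself, which infers viewpoints from rating history using matrix factorization. This stand-in assumes each rater already carries a hypothetical viewpoint label, and `Rating`, `is_well_formed`, `should_publish`, and the majority threshold are all invented for illustration.

```python
import re
from dataclasses import dataclass

MAX_NOTE_CHARS = 500  # cap stated by Meta
URL_PATTERN = re.compile(r"https?://\S+")  # assumption: any http(s) link counts


@dataclass
class Rating:
    viewpoint: str  # hypothetical cluster label, e.g. "A" or "B"
    helpful: bool


def is_well_formed(note_text: str) -> bool:
    """A note must fit the character cap and contain a supporting link."""
    return len(note_text) <= MAX_NOTE_CHARS and bool(URL_PATTERN.search(note_text))


def should_publish(note_text: str, ratings: list[Rating],
                   min_helpful_share: float = 0.5) -> bool:
    """Publish only if raters from at least two viewpoints each find it helpful."""
    if not is_well_formed(note_text):
        return False

    # Group helpfulness votes by the rater's viewpoint label.
    votes_by_viewpoint: dict[str, list[bool]] = {}
    for rating in ratings:
        votes_by_viewpoint.setdefault(rating.viewpoint, []).append(rating.helpful)

    # Agreement inside a single camp is never enough, however large it is.
    if len(votes_by_viewpoint) < 2:
        return False

    # Every viewpoint group must clear the helpfulness threshold on its own.
    return all(
        sum(votes) / len(votes) > min_helpful_share
        for votes in votes_by_viewpoint.values()
    )
```

Under this toy rule, fifty "helpful" ratings from one camp publish nothing, while a handful of ratings split across two camps can.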

Here’s the thing with community notes: They can actually work. A study from the University of Illinois Urbana-Champaign found that users on X were more willing to retract false posts in response to notes, and a study in PNAS Nexus last year found that community notes were perceived by users as significantly more trustworthy than misinformation flags or notes from third-party fact-checkers.

The problem with the community notes system is that it often turns into a platform for meta-arguments, brigading, and gamification that can keep actually useful information from ever seeing the light of day. A study by Spanish fact-checking site Maldita found that just 8.3% of proposed notes on X actually get published under a post. That’s in part why, along with a premium model that incentivizes clickbait, there is more disinformation and hateful trash on X than there used to be, despite any shame that might be induced by getting Noted.

Maybe Meta will be able to thread the needle and achieve a level of trustworthiness while also successfully scaling community notes to apply to the massive amount of content that gets posted across the company’s platforms every day. Or maybe it’s just a way to turn users into free labor while cutting costs. Who can say for sure? Maybe if we all vote on it, we’ll get to the truth.
