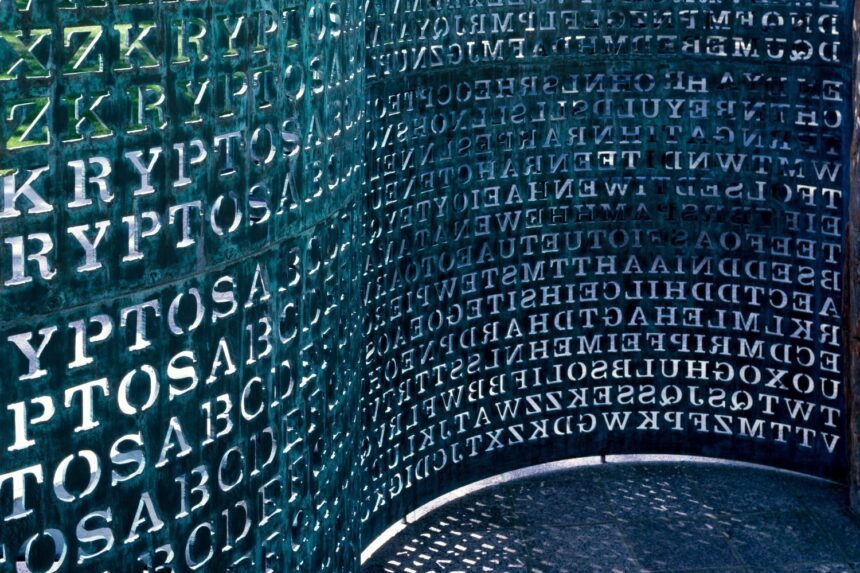

Near the CIA headquarters in Langley, Virginia, there is a sculpture known as Kryptos. It has been there since 1990 and contains four secret codes—three of which have been solved. The final one has gone 35 years without being decrypted. And, according to a report from Wired, the sculptor responsible wants everyone to know that you are not solving the damn thing with a chatbot.

Jim Sanborn, who has created sculptures for the Massachusetts Institute of Technology and the National Oceanic and Atmospheric Administration in addition to his work outside CIA HQ, has reportedly been inundated with people who are absolutely positive they've solved K4, the final, still-unsolved section, which he encrypted using knottier techniques than the first three. But these would-be code crackers aren't the actual cryptanalysts, professional or amateur, who have been obsessed with decoding the message since it first appeared. No, these are just people who ran the code through a chatbot and took its word for the answer.

In a conversation with Wired, Sanborn said that he's seen a significant uptick in submissions. That would be annoying enough if you're a 79-year-old who has gotten so many submissions over the last three and a half decades that you had to start charging a $50 fee to review solutions because of all the cranks who'd messaged you over the years. But worse than the sheer frequency of submissions, according to Sanborn, is the attitude of the submitters.

“The character of the emails is different—the people that did their code crack with AI are totally convinced that they cracked Kryptos during breakfast,” he told Wired. “So they all are very convinced that by the time they reach me, they’ve cracked it.”

Here are just a few samples of the extremely arrogant and self-satisfied messages that Sanborn has received in recent years:

“I’m just a vet…Cracked it in days with Grok 3.”

“What took 35 years and even the NSA with all their resources could not do I was able to do in only 3 hours before I even had my morning coffee.”

“History’s rewritten…no errors 100% cracked.”

If you’ve spent any amount of time on social media, particularly on X, you’ve seen these people. Maybe not these same guys, but the same type of guy. You know, the ones who say “Just Grok it” or reply to someone’s post with “Here’s what Grok says,” or share a screenshot of ChatGPT’s response as if that is in any way additive to the conversation.

The smugness is, frankly, inexplicable. Even if they did successfully crack Sanborn's code using AI (which, for the record, Sanborn says they haven't even come close to doing), what is it about asking a machine to do the work for you that generates such self-satisfaction? It'd be one thing if they trained a large language model on a vast body of encryption knowledge and used it to crack Sanborn's code. But they're literally just asking a chatbot to look at a picture and solve it. It's the least clever thing imaginable. It's flipping to the back of the textbook to see the correct answer to a math problem, except in this case, the textbook has confidently hallucinated the answer.

This behavior isn't uncommon, really. A study published last year in the journal Computers in Human Behavior found that when people learn that advice was generated by AI, they tend to over-rely on it, even letting it convince them to act against conflicting contextual information and their own interests. The same study found that over-relying on AI advice negatively affects people's interactions with other humans. Maybe it's because they're so damn pleased with themselves.